Exponential Moving Average, Volatility Pockets & Improved Correlation Studies

I apologize in advance to those of you who aren’t interested in the math, but I have had this stuck in my head for almost a week now and can’t get rid of it. To be honest, I probably shouldn’t write about this because it is such an integral part of so many future Virante projects, but here goes…

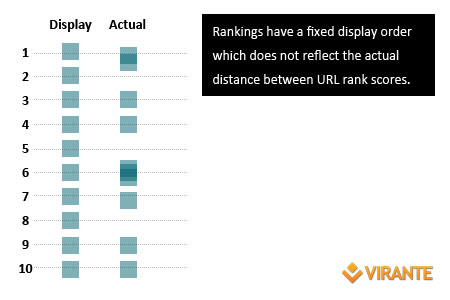

The Deception of Integer Rankings

A key problem with much of SEO is that rank order is deceptive. We perceive sites as positions 1 through 10, each with a fixed integer position when, in reality, their proximity to one another in terms of actual relationship-to-the-query scores can vary greatly. It is this hidden, underlying score that is key to many SEO functions – keyword competitiveness and ranking factor determination to name two. Below, I discuss a simple, algorithm-agnostic method to determine relative rank gaps and then explain a few key uses of this information.

Exponentially Weighted Moving Average

While search results are ordered 1-10 and have a single integer value each day, over time this position can and will fluctuate as ranking factors are updated and competitors make changes. This fluctuation is represented in the aggregate in systems like SEOMoz MozCast or SerpMetrics Flux. Sometimes we will look at an individual keyword to determine a specific SERP’s volatility but in this case, we will instead look at individual URLs within each keyword SERP over time to determine an average ranking.

As you can imagine, it would be silly to just average the last 30 days of rankings for an individual URL on any given keyword. Doing so would ignore the trajectory of the URL (tending upwards or downwards) and, if that time period included a major update, could have remarkably high variance. Instead, we look to a simple technique used in stock market volatility forecasting called Exponentially Weighted Moving Average (EWMA).

I’ll give a terse explanation here, but please take some time to read up a little more. When you average something over time, you can often assume that the most recent data is more valuable than the oldest data. Yesterday’s rankings are probably a better predictor of how your site will perform tomorrow than your rankings from 30 days ago. Instead of averaging all days of rankings data together, we weight them based on recency. The simplest way to accomplish this is a rolling average where each day you take the mean of today’s value and the previous day’s rolling average.

Let’s say you rank #1 today and yesterday you ranked #2. Using EWMA, we would average today and yesterday and come up with 1.5. Another day elapses and you are still at #1. Instead of averaging #1, #1, and #2, you would simply average today’s rank (1) with yesterday’s average (1.5). Your new EWMA is 1.25. In this scheme, each new day carries 50% of the weight in the average, and all previous days carry a combined 50%.
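For anyone who wants to see the arithmetic spelled out, here is a minimal Python sketch of that rolling average. The function name `ewma` and the `alpha` parameter are my own labels for this illustration, and seeding the average with the first day’s rank is an assumption that happens to match the example above.

```python
def ewma(ranks, alpha=0.5):
    """Return the running exponentially weighted average of daily ranks.

    ranks: displayed positions, oldest day first.
    alpha: weight given to the newest day (0.5 matches the example above).
    """
    if not ranks:
        return []
    # Assumption: seed the average with the first observed rank.
    averages = [float(ranks[0])]
    for rank in ranks[1:]:
        # New average = alpha * today's rank + (1 - alpha) * yesterday's average.
        averages.append(alpha * rank + (1 - alpha) * averages[-1])
    return averages


# Ranked #2, then #1, then #1 again.
print(ewma([2, 1, 1]))  # [2.0, 1.5, 1.25]
```

Passing a different `alpha` changes how quickly old rankings fade out of the average.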

The magic, of course, is in choosing that weight. Maybe a 50% weight is too strong, and today’s ranking should only count for 1/3. Or maybe we should vary that weight based on a second rolling metric, like the degree of change or a separate weighted variance (or, most importantly, choose a weight that preserves rank order). While worth thinking about, let’s go ahead and jump to the good stuff.
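Written out with a tunable weight, the update looks like the following. The symbols are just notation for this sketch: $r_t$ is the displayed rank on day $t$, $S_t$ is the running average, and $\alpha$ is the weight on the newest day, with the first day used as the starting value.

$$
S_t = \alpha\, r_t + (1 - \alpha)\, S_{t-1}, \qquad S_0 = r_0
$$

Unrolling the recursion shows where the "exponential" comes from:

$$
S_t = \alpha \sum_{k=0}^{t-1} (1 - \alpha)^k\, r_{t-k} + (1 - \alpha)^t\, r_0
$$

A ranking that is $k$ days old carries weight $\alpha (1 - \alpha)^k$, so its influence shrinks geometrically. Setting $\alpha = 1/2$ gives the 50/50 example above, and $\alpha = 1/3$ is the case where today’s ranking only counts for a third.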

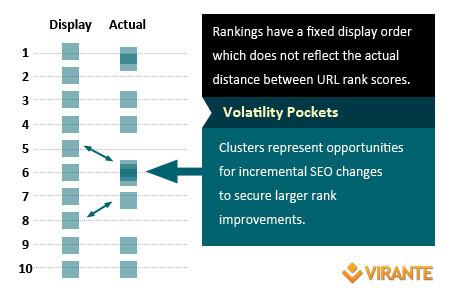

Finding Volatility Pockets for Keyword Competitiveness

Let’s say we have now used an EWMA to determine an average rank rather than the displayed rank. In the image above, you can see the overlaps that this can create. The top-ranking URL might hold #1 on 6 out of 7 days, but about once a week the #2 URL overtakes it. URLs ranking #3 and #4 almost never swap with one another, and #4 never cedes its position to #5.

However, #5, #6, and #7 quite regularly interchange with one another. And your site currently ranks #8. You haven’t dropped back to #9 in over a month, but you rarely shuffle up to #7. What we have done here is use the EWMA as a proxy for competitors’ relative rank scores. In this example, because #5, #6, and #7 are all similarly scored, there is a pocket of volatility surrounding them. Because our example site is sitting directly behind this pocket, we have reason to believe that an incremental improvement in value could precipitate a 3-position jump in the SERPs. Of course, we would need to follow up with a look at exactly what kind of investment that might entail, but we have a clean, easy-to-calculate opportunity from which to begin our analysis.
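One rough way to spot pockets like this programmatically is to sort URLs by their EWMA and group neighbors whose smoothed ranks sit close together. The sketch below is only an illustration: `find_pockets`, the `max_gap` threshold of 0.5, and the sample scores are all made up for the example, and a real analysis would need a threshold tuned against actual ranking history.

```python
def find_pockets(ewma_by_url, max_gap=0.5):
    """Group URLs whose smoothed rank is within max_gap of the next-best URL.

    ewma_by_url: dict mapping URL -> EWMA rank (lower is better).
    max_gap: hypothetical closeness threshold, not a recommended value.
    Returns a list of pockets, each a list of URLs ordered best rank first.
    """
    ordered = sorted(ewma_by_url.items(), key=lambda item: item[1])
    pockets, current = [], []
    for url, score in ordered:
        # A large gap to the previous URL closes the current group.
        if current and score - current[-1][1] > max_gap:
            if len(current) > 1:
                pockets.append([u for u, _ in current])
            current = []
        current.append((url, score))
    # Flush the final group if it contains more than one URL.
    if len(current) > 1:
        pockets.append([u for u, _ in current])
    return pockets


# Invented EWMA values that loosely mirror the example above.
scores = {
    "site-1": 1.1, "site-2": 1.9, "site-3": 3.0, "site-4": 4.0,
    "site-5": 5.7, "site-6": 6.0, "site-7": 6.3,
    "your-site": 7.9, "site-9": 9.2, "site-10": 10.0,
}
print(find_pockets(scores))  # [['site-5', 'site-6', 'site-7']]
```

Grouping by the gap to the nearest neighbor means a long chain of closely spaced URLs ends up in one pocket, which may or may not be what you want for a given SERP.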

Finding volatility pockets like this that sit around positions #3, #4, and #5 can be particularly valuable because a jump from below the fold to above the fold can yield strong CTR increases from the search results.

Improving Correlation Studies

This one really gets my blood going because of the opportunity it presents for better aggregate studies of rank factors. Some of you might know that a few years back with the help of many including Authority Labs, Majestic SEO, and SEOMoz, we ran a correlation study with over 1,000,000 keywords. This produced many innovations at Virante including one we still use regularly today, relevancy modified MozRank.

One of the biggest issues we run into with the correlation study is that the dependent variable has been tampered with by Google. Instead of giving us the actual rank score, Google shows us the 1 through 10 integer rank. #5 might literally be 100x more relevant to the query than #6, while #6 might be only .000000001x better than #7. If we take a snapshot of rankings on just 1 day, we can’t model this out. We are stuck with a fixed, stair-step dependent variable.

But, if we use an exponentially weighted moving average, we can help solve several issues…

- We can handle the issue of lagging independent variables (like slightly out-dated link data).

- We can, to some degree, transition rank from a discrete to a continuous variable.

- We can establish a cohort of solid, unchanging rankings to analyze.
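As a rough illustration of the last two points, the sketch below filters a ranking history down to a stable cohort and correlates a ranking factor against smoothed rank instead of integer rank. Everything here is an assumption made for the example: the helper names `pearson` and `stable_cohort`, the `max_spread` cutoff, the choice of a plain Pearson coefficient, and the toy numbers, which are invented and do not come from any study.

```python
from math import sqrt


def pearson(xs, ys):
    """Plain Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def stable_cohort(history_by_url, max_spread=0):
    """Keep URLs whose displayed rank moved by at most max_spread positions."""
    return {
        url: ranks
        for url, ranks in history_by_url.items()
        if max(ranks) - min(ranks) <= max_spread
    }


# Invented data: a hypothetical link metric and an EWMA rank per URL.
link_metric = [9.1, 7.4, 7.2, 5.0, 3.3]
ewma_rank = [1.2, 2.1, 2.9, 4.4, 5.0]
print(pearson(link_metric, ewma_rank))

# Invented rank histories; only the URL that never moved is kept.
history = {"site-a": [1, 1, 1], "site-b": [2, 3, 2]}
print(stable_cohort(history))
```

Because a lower rank number is better, a factor that helps rankings shows up as a negative coefficient in this setup.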

More with Math

The Art vs. Science debate continues in the SEO community. I don’t think it is an unhealthy argument, but I do want to make sure that we always stay grounded in math and science. They tell us what matters and to what degree, and they help us set measurable goals. Art is the method we use to meet those goals.

2 Comments

Really interesting way of looking at ranking data. Thanks for putting the idea out.

I never thought I’d hear the expression exponential smoothing (exponential moving averages) in SEO – which is also used to forecast demand for supply chain problems. Thanks for introducing the idea of volatility pockets – the visualization was especially helpful.

IMO, our SEO science is only as well-grounded as our data source, which explains why most SEO studies are correlational.