Second Page Poaching – Advanced White Hat SEO Techniques

It is time that someone put Quadzilla in his place. Don’t get me wrong: I think there is a huge amount of innovation in the black hat and gray hat industries that is simply too risky for white-hatters to explore. Nevertheless, it is ridiculous to claim that white hat techniques have become so uniform and ubiquitous in their application that nothing truly “advanced” still exists. In today’s post I am going to talk about a technique with which many of you will not be familiar. Here at Virante we call it Second Page Poaching. But before we begin, let me briefly explain what I mean by “advanced white-hat SEO”.

Chances are, unless your site has thousands of well-optimized pages in Google, advanced white hat techniques (and many others) will be useless to you. Advanced white hat SEO techniques tend to deal with scalable SEO solutions that bring higher search RoI to sites that seem to have reached their peak of potential search traffic. We are not talking about training a young boxer to become a contender. We are talking about turning a contender into a champion. When your PR7 eCommerce site is competing against other PR7 eCommerce sites for identical products and identical search phrases, all traditional optimization techniques (white, black, gray, blue hat, whatever) tend to fall by the wayside. Why buy links when you already have 100K natural inbounds? Why cloak when you have tons of legitimate content? This is where advanced white hat SEO kicks in. These are techniques that can bring high RoI with little to no risk when scaled properly. The example I will discuss today, “Second Page Poaching”, is highly scalable, easily implemented, and offers a high Return to Risk ratio.

What is Second Page Poaching?

Second Page Poaching is the coordination of analytics (to find keywords for which your pages rank near the top of Page 2) with PageRank flow and internal anchor text, in order to coax minor SERP changes that move those pages from Page 2 to Page 1.

Why Second Page Poaching

We need to recognize that most on-site SEO techniques, especially PageRank flow, will increase rankings by only a position or two. If you have a well-optimized site, even a sitewide link to one particular internal page is unlikely to push it up 5 or 6 positions. Moreover, PR-flow solutions are unlikely to move a page from position 3 to position 2 or 1, where competition is stiffer. Instead, we want to target the pages that will see the greatest traffic increase from a gain of 1 or 2 positions in the search engines.

Looking at the released AOL search data, we can determine which positions are ripest for “poaching”. We use this term because we are hunting for pages and related keywords on your site that meet certain qualifications.

Below is a graph showing the relative percentage increase in clickthroughs by position within the top 12 results in AOL’s released data. As you will see, there are spikes when moving from position 2 to 1, 11 to 10, 12 to 11, 3 to 1, 11 to 9, and 12 to 10.

If we look at the data directly, you can see that moving from the top of the 2nd page to the bottom of the first produces increases in the 500% range or greater. More importantly, as previously discussed, PR-flow methods are unlikely to move you from #2 to #1, given the competition there. But will that little bit of PageRank boost help you move from #11 to #10? You betcha! Simply put, if you can move hundreds of pages on your site from #11 to #10, you will see a fivefold increase in traffic for those keywords. If you were to do the same to move them from #7 to #6, you would barely see an increase at all.

Data Provided by RedCardinal
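If you want to find the ripest positions in your own numbers rather than reading them off a graph, here is a minimal Python sketch. It assumes you already have total clicks per position (from the AOL data, RedCardinal’s figures, or your own logs; the dictionary input format is an assumption) and lists every 1- or 2-position climb ranked by percentage lift. The function names are hypothetical.

```python
def relative_lift(clicks: dict[int, float], src: int, dst: int) -> float:
    """Percentage increase in clicks when a listing moves from position `src` up to `dst`."""
    return (clicks[dst] - clicks[src]) / clicks[src] * 100.0


def best_moves(clicks: dict[int, float], max_jump: int = 2) -> list[tuple[int, int, float]]:
    """Every upward move of 1..max_jump positions, sorted by percentage lift, largest first."""
    moves = []
    for src in clicks:
        if clicks[src] <= 0:
            continue  # no baseline clicks, so a percentage lift is undefined
        for jump in range(1, max_jump + 1):
            dst = src - jump
            if dst in clicks:
                moves.append((src, dst, relative_lift(clicks, src, dst)))
    return sorted(moves, key=lambda move: move[2], reverse=True)
```

Given clicks for positions 1 through 12, the moves at the top of the returned list are the natural candidates for poaching.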

As with any advanced technique, it is important to realize that this one becomes incredibly valuable when a site already has tens if not hundreds of thousands of pages. If you bring in 10,000 visitors a month from Page 2 traffic, you could see your traffic increase by 40,000 fairly rapidly. If you are a mom-and-pop shop with 10 visitors from Page 2 traffic, you might see only 20 new visitors, as your site’s internal PR will be less capable of pumping up the rankings for those Page 2 listings.
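The 40,000 figure follows from a simple multiplier, assuming (as above) that a successful poach multiplies a keyword’s traffic roughly fivefold. Writing V_2 for monthly visitors arriving from Page 2 listings and m for the traffic multiplier, the additional monthly visitors are:

$$\Delta V = V_2\,(m - 1), \qquad 10{,}000 \times (5 - 1) = 40{,}000$$

The mom-and-pop example corresponds to a weaker effective multiplier, 10 × (3 − 1) = 20, consistent with having less internal PageRank to redistribute.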

Step 1: Data Collection

For most large sites, data collection is quite easy. Simply analyze your existing log files, or capture inbound traffic on the fly, and record every visitor arriving from a Page 2 listing. Identify all inbound referrers that include “google.com”, “q=”, and “start=10” (Google’s offset for a results page beginning at result #11), then store the keyword and the landing page. Make sure your table stores a timestamp as well, since frequency will matter later when we decide which keywords to poach. If your log files store referrer data, it may be useful to include historical data rather than starting from scratch. A suitable site should find hundreds if not thousands of potential keyword/landing page combinations from which to choose.
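The collection rule above can be sketched in Python using only the standard library. This is an illustration under stated assumptions: the table name `page2_hits`, the helper names, and the use of SQLite are hypothetical, and the host check matches `google.com` and its subdomains only (add regional domains such as `google.co.uk` if you need them).

```python
import sqlite3
from datetime import datetime, timezone
from typing import Optional
from urllib.parse import parse_qs, urlparse


def parse_page2_referrer(referrer: str) -> Optional[str]:
    """Return the search keyword if `referrer` is a Google results URL with start=10, else None."""
    parsed = urlparse(referrer)
    host = parsed.hostname or ""
    if host != "google.com" and not host.endswith(".google.com"):
        return None
    params = parse_qs(parsed.query)
    # start=10 means the results page begins at the 11th result, i.e. Page 2
    if params.get("start") != ["10"] or "q" not in params:
        return None
    return params["q"][0]  # parse_qs already decodes "+" and %XX escapes


def init_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the hypothetical page2_hits table."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS page2_hits ("
        "keyword TEXT NOT NULL, landing_page TEXT NOT NULL, seen_at TEXT NOT NULL)"
    )
    return conn


def record_hit(conn: sqlite3.Connection, referrer: str, landing_page: str,
               when: Optional[datetime] = None) -> bool:
    """Store keyword, landing page and timestamp for a Page 2 visit; return True if stored."""
    keyword = parse_page2_referrer(referrer)
    if keyword is None:
        return False
    seen_at = (when or datetime.now(timezone.utc)).isoformat()
    conn.execute(
        "INSERT INTO page2_hits (keyword, landing_page, seen_at) VALUES (?, ?, ?)",
        (keyword, landing_page, seen_at),
    )
    conn.commit()
    return True
```

Running `record_hit` over historical log lines and then over live traffic builds the keyword/landing page/timestamp table; a `GROUP BY keyword, landing_page` over a 30-day window then gives monthly frequency.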

Step 2: Data Analysis

Because this step will be the most processor-intensive, it is important to prioritize. In the Data Analysis component, we judge the keyword/landing page combinations on several characteristics. Because we only want to poach keywords for which we already rank #11 or #12, we will have to perform rank checks. To reduce what could be a large computing burden, we should first consider the other metrics and then choose from that group which keywords to rank-check. We will consider the following characteristics:

- Frequency: Number of visitors driven per month, the higher the better.

- Conversion Rate: Why poach keywords for pages that convert poorly?

- Sale Potential: Why poach keywords for low RoI goods?

Now, assuming we have a set of keywords ordered by conversion, profit and frequency, we run simple rank-checking software to identify those keywords for which your site currently ranks #11 or #12. Once that data point is added to the set, we use a formula to determine which keywords are most worth targeting. I will not get into it now, as it is kind of proprietary, but you will want to take several factors into account to determine the minimum number of links needed to promote a page from #11 to #10 or from #12 to #10. Once complete, you should have your list of hundreds if not thousands of “keyword/landing page” combinations worth targeting.
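The post does not publish its scoring formula, so the sketch below substitutes a deliberately simple, hypothetical value score (Page 2 visits × conversion rate × profit per sale) purely to show the order of operations: sort by value first, then spend rank checks only on the top of the list and keep the #11 and #12 results. `check_rank` is a placeholder for whatever rank-checking tool you use; no particular service or API is implied.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    keyword: str
    landing_page: str
    monthly_visits: int      # Frequency: Page 2 referrals per month
    conversion_rate: float   # Conversion Rate: fraction of visits that convert (0..1)
    profit_per_sale: float   # Sale Potential: margin per conversion

    def value_score(self) -> float:
        # Hypothetical stand-in for the unpublished formula:
        # expected monthly profit from the current Page 2 traffic.
        return self.monthly_visits * self.conversion_rate * self.profit_per_sale


def shortlist(
    candidates: list[Candidate],
    check_rank: Callable[[str, str], Optional[int]],
    max_checks: int = 1000,
) -> list[tuple[Candidate, int]]:
    """Rank-check only the `max_checks` most valuable candidates; keep those at #11 or #12."""
    ordered = sorted(candidates, key=lambda c: c.value_score(), reverse=True)
    targets = []
    for cand in ordered[:max_checks]:
        rank = check_rank(cand.keyword, cand.landing_page)
        if rank in (11, 12):
            targets.append((cand, rank))
    return targets
```

Capping `max_checks` is what keeps the rank-checking burden proportional to the candidates that matter, rather than to every keyword in the log.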

Step 3: PR Flow Implementation

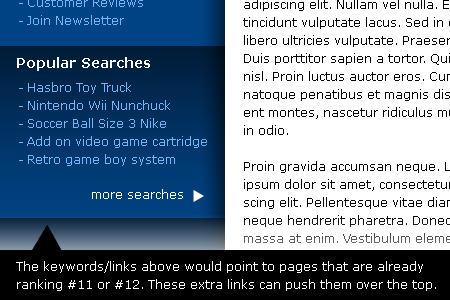

There are many creative ways to add these links across your site, the easiest of which is a simple “Other People Searched For:” section at the bottom of internal pages that lists up to 5 alternatives. Your system would then choose 5 pages from the list and add text links, using the inbound keyphrase as the anchor text, pointing to these landing pages. If you want to get really crafty, you can use your own internal search to identify related pages on which to place links to the different landing pages in your list. Ultimately, though, you will have added a large volume of links across your site that slightly increase the PageRank flowing to these high-potential pages ranking #11 or #12. As Google respiders your site and finds these links, these pages will climb the 1 or 2 positions needed to quadruple or better their current inbound traffic for particular keywords.
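One way the “Other People Searched For:” section could be generated is sketched below. Assumptions: `targets` is the (keyword, landing page URL) list from Step 2, pages are chosen at random but seeded by the current page’s URL so each page shows the same links on every crawl, and the CSS class name is arbitrary. None of this is taken from the author’s system.

```python
import html
import random


def related_searches_block(targets: list[tuple[str, str]], current_url: str, k: int = 5) -> str:
    """Return an HTML 'Other People Searched For:' block linking to up to k target pages."""
    # Never link a page to itself
    pool = [(kw, url) for kw, url in targets if url != current_url]
    # Seeding with the page URL keeps the selection stable between crawls
    rng = random.Random(current_url)
    chosen = rng.sample(pool, min(k, len(pool)))
    items = "".join(
        f'<li><a href="{html.escape(url, quote=True)}">{html.escape(kw)}</a></li>'
        for kw, url in chosen
    )
    return (
        '<div class="related-searches"><p>Other People Searched For:</p>'
        f"<ul>{items}</ul></div>"
    )
```

Because the anchor text is the inbound keyphrase itself, it is escaped before output; keyphrases come from user searches and can contain characters such as `&` or `<`.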

Bear in mind that you risk very little with this technique. PR flow tends to have little impact on your high-dollar, high-traffic keywords (where inbound links rule the day). Most importantly, because the system is automated, it will allow some internal pages to drop from position 6 to 7, where little to no real traffic is lost, but it will capture and restore rankings if any pages ever drop from 9 or 10 to 11 or 12 because of the new internal linking scheme. You will lose PR on pages that have nothing to lose and gain PR on pages that have everything to win. Magnify that across 10,000 pages, and you can see the profits from a mile away.

Step 4: Churn and Burn

This is perhaps the most important part of the process: continued analysis.

- The system needs to capture any successful poaches and make sure you continue to link to them internally. If the system drops pages once they move from 11 to 10, you have failed 🙂

- The system needs to identify non-movers (pages whose position is not improving with internal linking) and blacklist them so you do not keep wasting PR flow on them.

- The system needs to keep replacing non-movers with the next-best candidates and continue looking for more keyword/landing page options.
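The three rules above can be expressed as a small bookkeeping class, re-run after each rank check. This is a sketch: the `patience` threshold (how many review cycles a keyword gets before being treated as a non-mover), the `slots` limit, and all names are hypothetical choices, and rank data is assumed to come from the same rank-checking step used in Step 2.

```python
from dataclasses import dataclass, field


@dataclass
class PoachTracker:
    slots: int                # how many not-yet-won keywords receive poaching links at once
    patience: int = 3         # hypothetical: review cycles before a non-mover is blacklisted
    active: dict[str, int] = field(default_factory=dict)  # keyword -> cycles spent on Page 2
    won: set[str] = field(default_factory=set)            # successful poaches: keep linking
    blacklist: set[str] = field(default_factory=set)      # non-movers: stop spending PR

    def review(self, ranks: dict[str, int], queue: list[str]) -> None:
        """Apply one review cycle: keep winners, blacklist non-movers, refill from `queue`."""
        for kw in list(self.active):
            rank = ranks.get(kw)
            if rank is not None and rank <= 10:
                self.won.add(kw)       # never drop a page once it reaches Page 1
                del self.active[kw]
                continue
            self.active[kw] += 1
            if self.active[kw] >= self.patience:
                self.blacklist.add(kw)
                del self.active[kw]
        # Replace removed keywords with the next-best candidates, best first
        for kw in queue:
            if len(self.active) >= self.slots:
                break
            if kw not in self.active and kw not in self.won and kw not in self.blacklist:
                self.active[kw] = 0

    def link_targets(self) -> set[str]:
        """Keywords that should currently receive internal links."""
        return set(self.active) | self.won
```

Keeping `won` separate from `active` means successful poaches never compete for slots with new candidates, so the linking that got them to Page 1 stays in place.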

Summing Up

I know this is a long post, but I feel it is worth reiterating. Advanced white hat SEO techniques do exist, but no one wants to talk about them, for the same reason no one wants to talk about advanced black hat techniques. Second Page Poaching is just one of many options available to large-scale websites looking to gain an edge over their competitors. Many of these techniques are fully automated, easily implemented, and highly scalable. However, most of them are kept under lock and key.

25 Comments

Trackbacks/Pingbacks

- » No Show Today, Unfortunately! But the Tournament Is Still On! | seoFM - the first German podcast for SEOs and online marketers - [...] Second Page Poaching - Holy grail of white hat SEO, or impractical? [...]

- Links To Beat The Holiday Blues | This Month In SEO - 12/08 | TheVanBlog | Van SEO Design - [...] Second Page Poaching - Advanced White Hat SEO Techniques [...]

- LJ Longtail SEO | SEO Reports - [...] original idea for this plugin was taken from SEO Booster Lite and Second Page Poaching - Advanced White Hat SEO. I used…

- LJ Longtail SEO | Best Plugins - wordpress – widgets – plugin 2012 - [...] original idea for this plugin was taken from SEO Booster Lite and Second Page Poaching – Advanced White Hat…

- Basic SEO Stuff That Every Best SEO Companies Should Know – Digital Marketing Company | Digital Marketing, Internet Marketing- Yourneeds - […] www.thegooglecache.com/advanced-white-hat-seo-techniques/second-page-poaching-adanced-white-hat-seo-… […]

That’s a cool technique if it’s applicable. But to take your example:

A #12 term that brings 10k visits per month would bring 1,433,333 at #1 or 47,777 searches per day.

I’d wager that there are fewer than 20 e-commerce-specific search strings that fit the bill.

However, if you’re talking about a site’s aggregate Page 2 traffic for non-unique e-commerce search strings (say, ones with 100 or more searches per month) that together total over 10k per month on a single site, then it makes more sense.

If you have a program that automates the page rank sculpting dynamically on a daily basis based on terms that fit those criteria (by checking the SERPs and allotting the necessary links across the entire site), then kudos! That would be worthwhile.

Superb write-up of a great technique – a great read!

This is certainly a piece that will be safely bookmarked…thanks a lot!

@quadzilla fancy writing the app and sharing?!

p.s. there’s a typo in the title: ‘Adanced’ as opposed to ‘Advanced’

A good post, nice reveal – the key is writing the automation so it can be streamlined on a mass scale.

Ultimately there are levels: the USA has the CIA, and the web has all kinds of hats.

OK here’s the math:

We tested on one site with 500k G searches per month and another with 2.5 Million G searches per month. We found that searches that fit the guidelines of “more than 10 searches per month, ranks 11-30” accounted for 0.15% and 0.38% of total searches respectively.

Assuming you would get a tenfold increase by taking a SERP position from 21 to 10, or a fivefold increase by bringing it from 11 to 9, we get a range of increase on the affected searches of 5 to 10 fold.

So you’re looking at anywhere from a 0.65%-3.8% increase in traffic by successfully implementing this tactic.

First, it is unlikely that you have accurate rankings for the hundreds of thousands of long-tail keywords we are talking about. Unless your systems can grep through and analyze, in a matter of hours, logs that include not only 5 million search entries but all of the type-in and referral traffic as well, it sounds to me like you are probably using a sampled data set to make your claims. Unless, of course, you are using Google Webmaster Tools’ abridged data.

More importantly, I am not sure what you mean by more than 10 searches a month. Are you referring to the number of search referrals you receive for that keyword each month, or to the actual number of searches performed each month? My guess is you are referring to the first option, which would be a gross error. We are not limiting ourselves to a minimum number of searches per month; rather, we are ordering keywords by that data. Considering that a simplified version of this could be developed in a matter of hours, it makes no sense to ignore large volumes of keywords just because you might only quadruple them from 2 to 8 visitors a month. For example, a site I am looking at right now receives approximately 5.3% of its search traffic from Page 2 keywords. Achieving a poach at a 4x multiplier (smaller than what your calculations use), we would be looking at a 17+% increase in search traffic via this method, although at the moment we are seeing slightly less than that, about 14%. It depends on how top-heavy the site is for search (whether it relies on the long tail or on massive keywords).

Look Quad, I understand that this technique isn’t perfect and doesn’t work in all circumstances; it needs the right client and the right situation. But traditional link building and optimization don’t work for a massive site like reviews.cnet.com. Could you imagine a site like that choosing a handful of heavy-hitter keywords and ranking for them, while ignoring the potential rankings for literally millions of long-tail keywords?

I am not trying to argue here, but I stand by my simple, still-to-be-refuted claims: Advanced White Hat SEO does exist. This is an example.

@ ben – yea, we’ll probably write it.

“Increase your G traffic by up to 3.8%!!”

Think that headline will sell? 🙂

I wasn’t trying to argue either, and I apologize if it came off that way. I too appreciate you sharing this technique; I like it. I’d even concede that it’s both advanced and white hat.

It could be that our data is skewed away from the long tail because we are so successful at competing at the short head of the tail. Or it could be that you see that much of a jump by going below 2 G search referrals per month.

No, we didn’t use a sample set; we looked at the prior 30 days. Yes, we used Google Analytics data, and yes, we discarded unique strings. I still think you should discard unique strings; aren’t a majority of searches unique, meaning they won’t be searched for again? [site needed]

I’ll see what happens if we go back on a year’s worth of data.

If you say you’re getting ~14% returns from this technique – I’d think it was a little on the high side, but I certainly wouldn’t call you a liar.

Great article (and no, it’s not that long; I’ve seen longer posts). 😉

Why not just concentrate on getting more links to those internal pages from other sites? Many ecommerce sites don’t have a lot of internal links to their product pages, and most likely just two or three additional links will bring them from page 2 to page 1.

Just fabulous; it’s so rare we see any new white hat stuff out there. Thanks for sharing… great idea and very useful for seeding some new ideas. I’ve been tinkering with the idea for a white hat onpage optimization tool in the same general arena (but more useful on smaller sites), this may be the impetus I need to get it going.

In any event, this is nice to see because there’s not enough onpage optimization discussion anymore. It kinda died out with keyword density, didn’t it?

I love your take on advanced white hat and agree that you should never stop trying to optimize every single little thing you can on an already well-ranking website. Thanks for the insight.

Mary

Thanks for the tip on adding popular searches. I have several PR 3 and 4 pages I can use to build links to my target pages. I bet it will work great!

You just made my head spin… I will try to implement it on some of my slower-moving sites. Thanks. And no, the article was not too long 🙂

One of my sites falls into the group that could benefit from such a change.

Would appreciate additional detail on data collection…

What is your definition of White Hat? I know Google’s definition is techniques done to your pages for the benefit of your visitors, not for the benefit of page rank.

That means any discussion with the goal of increasing page rank is not purely white hat.

Your post just made my day. So glad I got to read it.

It’s good to see that there are still people out there willing to perform white hat SEO. As much as I love reading Quadzilla and black hat SEO techniques, I’m always on the lookout for true SEO specialists. These are the ones who will deliver the best search results! Cheers dude

When I read this post, I immediately thought of another, similar one that explains how to set up Google Analytics filters to find the second page keywords to target: http://jamesmorell.com/second-page-keyword-targeting/

I thought I’d post it now before I forget about it 🙂

Great post btw

Indeed, this is a really interesting method. I’ve never tried it, and I didn’t think white hat could surprise me with anything.

If you have a program that automates the page rank sculpting dynamically on a daily basis based on terms that fit those criteria (by checking the SERPs and allotting the necessary links across the entire site), then kudos! That would be worthwhile. Thanks for sharing.

I’ll be trying this for some websites and see how it works out.

Amazing to think that such a small change in rankings can have such a massive effect, but obviously makes sense! Thanks for the tips, I’m now off to get all my pages that are on page 2, to page 1, lol!

PageRank sculpting mechanism that is based on population density?

I’ve never heard anything like that before. How does it work?

Some decent advice there, nice one 🙂